Hello everyone,

I created this new topic to share some "basic scripts" for compressing videos already on your PC.

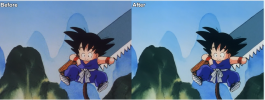

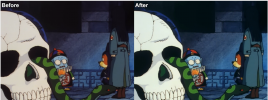

The scripts are aimed mostly at anime and cartoons but can also be used for regular movies and series.

Note that you may need to adjust some settings to your preference; the scripts provided here are for reference.

All scripts use 10-bit HEVC where possible. Scripts for H.264 might follow.

This topic is mainly for Intel iGPUs/dGPUs and Nvidia GPUs.

Because of AMD driver and related encoding issues, please be cautious when using the scripts on AMD GPUs.

At this point, I would like to thank:

@0x0x0x0x0 for providing the idea of the Intel "high" CQP settings and for Intel encoding testing.

@cartman0208 and @DeepSpace for testing some of the encodings.

@Ch3vr0n for providing NVEncC hardware readouts of the RTX 4 series.

Let's proceed

Needed for Intel:

rigaya's QSVEncC and the latest drivers, as well as a capable Intel iGPU or dGPU.

The latest version can be found here:

Code:

https://github.com/rigaya/QSVEnc/releases

Please download and extract into a folder of your choice and remember the location.

Needed for Nvidia:

rigaya's NVEncC and the latest drivers, as well as a capable Nvidia GPU.

The latest version can be found here:

Code:

https://github.com/rigaya/NVEnc/releases

Please download and extract into a folder of your choice and remember the location.

Needed for AMD:

rigaya's VCEEnc and the latest drivers, as well as a capable AMD GPU.

The latest version can be found here:

Code:

https://github.com/rigaya/VCEEnc/releases

Please download and extract into a folder of your choice and remember the location.

For all scripts, copy the text 1:1 and change only the settings you understand or want to experiment with.

Create a new TXT file for each script in the same folder as the extracted encoder.

For example, if you extracted QSVEncC to "D:\Encoder\QSVEncC\" the script should be saved there too.

Give each script a different name.

How to run the scripts?

Once each script you need is saved in the encoder folder under its own name, you can either run the command from the command line or create a batch file.

Run from the command line:

Open a command line in the folder containing the videos you want to convert, make sure the output folder exists, and adjust the example below to match your paths:

Code:

for %i in (*.mp4) do "E:\rigaya\NVEncC\NVEncC64.exe" -i "%i" --sub-source "%~dpni.srt" --option-file "E:\rigaya\NVEncC\example.txt" -o "F:\Re-encode\%~ni.mkv"

For a batch file:

Create a new text document.

Rename it with a .bat extension, e.g., EncodeVideosWithSubtitles.bat.

Right-click on the .bat file and select 'Edit', which should open the file in Notepad or another text editor.

Paste the following script:

Code:

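@echo off
rem Switch to the folder (and drive) that contains this batch file.
cd /d "%~dp0"
rem Encode every MP4 here and mux in the matching .en-us.srt subtitle.
for %%i in (*.mp4) do (
    "E:\rigaya\NVEncC\NVEncC64.exe" -i "%%i" --sub-source "%%~dpni.en-us.srt" --option-file "E:\rigaya\NVEncC\example.txt" -o "F:\Re-encode\%%~ni.mkv"
)
pause

%~dp0 expands to the drive and folder where the batch file resides, so the cd /d line makes that folder the current directory, even if it is on a different drive.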

In a batch script, loop variables need double percent signs (%%i) instead of the single % used on the command line.

The pause command at the end will keep the command window open after processing so you can see any errors or messages.
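To see what the loop-variable modifiers expand to before encoding anything, a small test batch along these lines can help. It is only a sketch: it prints names and never calls the encoder, and the .en-us.srt suffix is simply the naming used in the example in this post.

```bat
@echo off
rem Demo only: prints what each loop-variable modifier expands to.
rem Nothing is encoded and no files are changed.
cd /d "%~dp0"
for %%i in (*.mp4) do (
    echo File name as found:       %%i
    echo Name without extension:   %%~ni
    echo Drive, path and name:     %%~dpni
    echo Subtitle it would look for: %%~dpni.en-us.srt
    echo.
)
pause
```

Save it next to your videos and double-click it. If the subtitle path printed on the last line does not match a real file, adjust the suffix before running the real script.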

To use the script:

Place the .bat file in the directory containing your MP4 videos and subtitle files.

Double-click the .bat file to run it.

The script will process each MP4 file, encode it using the settings in example.txt, and mux in the subtitles, saving the result in the F:\Re-encode directory.

Please note that you need to adjust the subtitle file name in the script to match the subtitle files in your folder.
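If some videos have no subtitle file, or the output folder might be missing, the batch file can check for both first. The following is a rough sketch, not a tested replacement: the ENCODER, OPTIONS and OUTDIR variables are names introduced here for illustration, and their values are just the example paths from this post.

```bat
@echo off
rem Sketch: the same loop with two safety checks added.
rem ENCODER, OPTIONS and OUTDIR are illustrative names; adjust the paths.
set "ENCODER=E:\rigaya\NVEncC\NVEncC64.exe"
set "OPTIONS=E:\rigaya\NVEncC\example.txt"
set "OUTDIR=F:\Re-encode"

cd /d "%~dp0"

rem Create the output folder if it does not exist yet.
if not exist "%OUTDIR%\" mkdir "%OUTDIR%"

for %%i in (*.mp4) do (
    if exist "%%~dpni.en-us.srt" (
        rem Subtitle found: encode and mux it in.
        "%ENCODER%" -i "%%i" --sub-source "%%~dpni.en-us.srt" --option-file "%OPTIONS%" -o "%OUTDIR%\%%~ni.mkv"
    ) else (
        rem No subtitle: encode the video on its own.
        echo No subtitle found for "%%i", encoding without one.
        "%ENCODER%" -i "%%i" --option-file "%OPTIONS%" -o "%OUTDIR%\%%~ni.mkv"
    )
)
pause
```

The three paths sit at the top so that switching to QSVEncC or another option file means changing one line instead of editing the loop.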

You can also mark a subtitle as forced and set its language metadata, for example:

Code:

--sub-source "%%~dpni.en-us.srt:disposition=forced;metadata=language=eng"

Disclaimer:

While I have dedicated a significant amount of time and effort to test everything detailed in this post, I cannot guarantee that there are no mistakes.

Human error is always a possibility, and this guide is no exception.

If you find any inaccuracies or errors, please kindly bring them to my attention.

I'm committed to rectifying any issues based on your feedback.

I genuinely appreciate constructive comments and suggestions that can help improve this guide further.